Pre-trained Models for Natural Language Processing: A Survey gives a comprehensive summary of pre-trained models for NLP.

Deep neural networks commonly used for NLP include convolutional neural networks (CNNs), recurrent neural networks (RNNs), graph-based neural networks (GNNs), and attention mechanisms.

Overview of Pre-trained Models for NLP (PTMs)

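Advantages of PTMs

- They learn universal representations from large corpora, which benefit downstream tasks.

- They provide a better initialization of model parameters.

- Pre-training can be seen as a form of regularization that helps avoid overfitting on small datasets.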

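Evolution of PTMs

First-generation PTMs (pre-trained word embeddings such as Skip-Gram and GloVe):

These models are context-free and cannot capture higher-level concepts of text in context, such as polysemous disambiguation, syntactic structures, semantic roles, and anaphora.

Second-generation PTMs (contextual encoders such as CoVe, ELMo, OpenAI GPT, and BERT):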

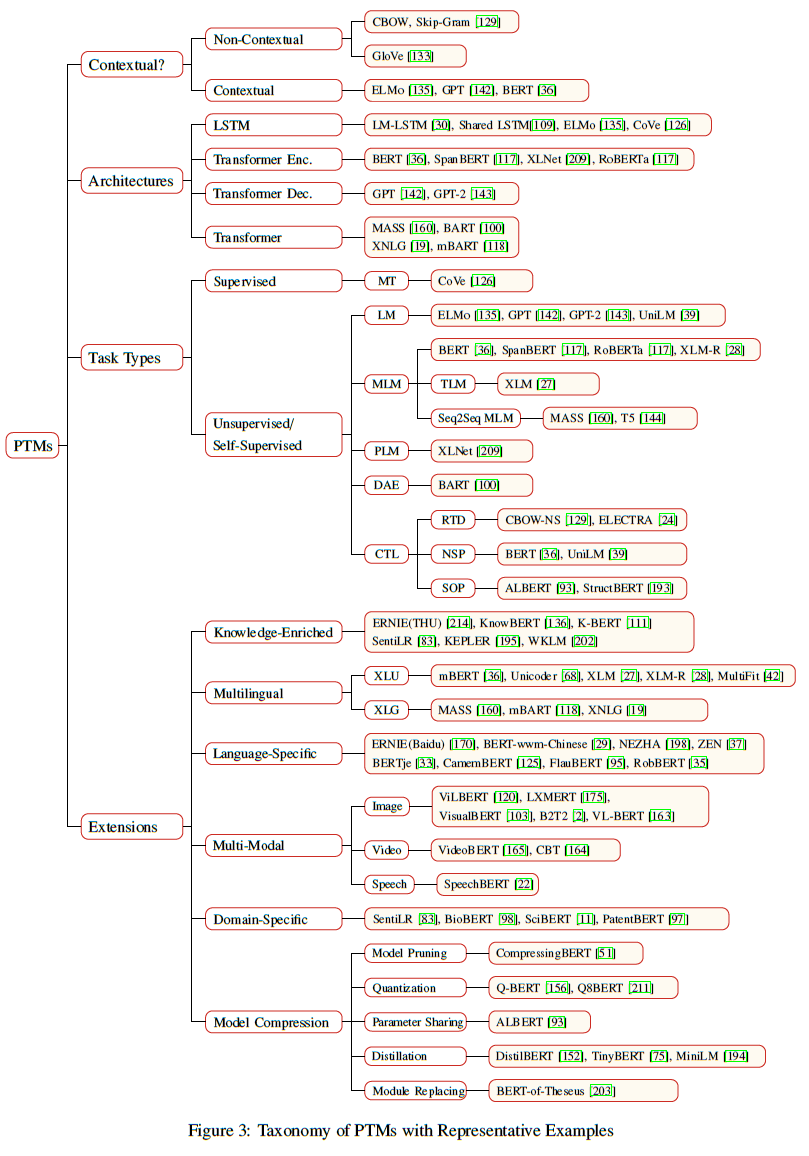

Taxonomy of PTMs

Representation type

- non-contextual models

- contextual models

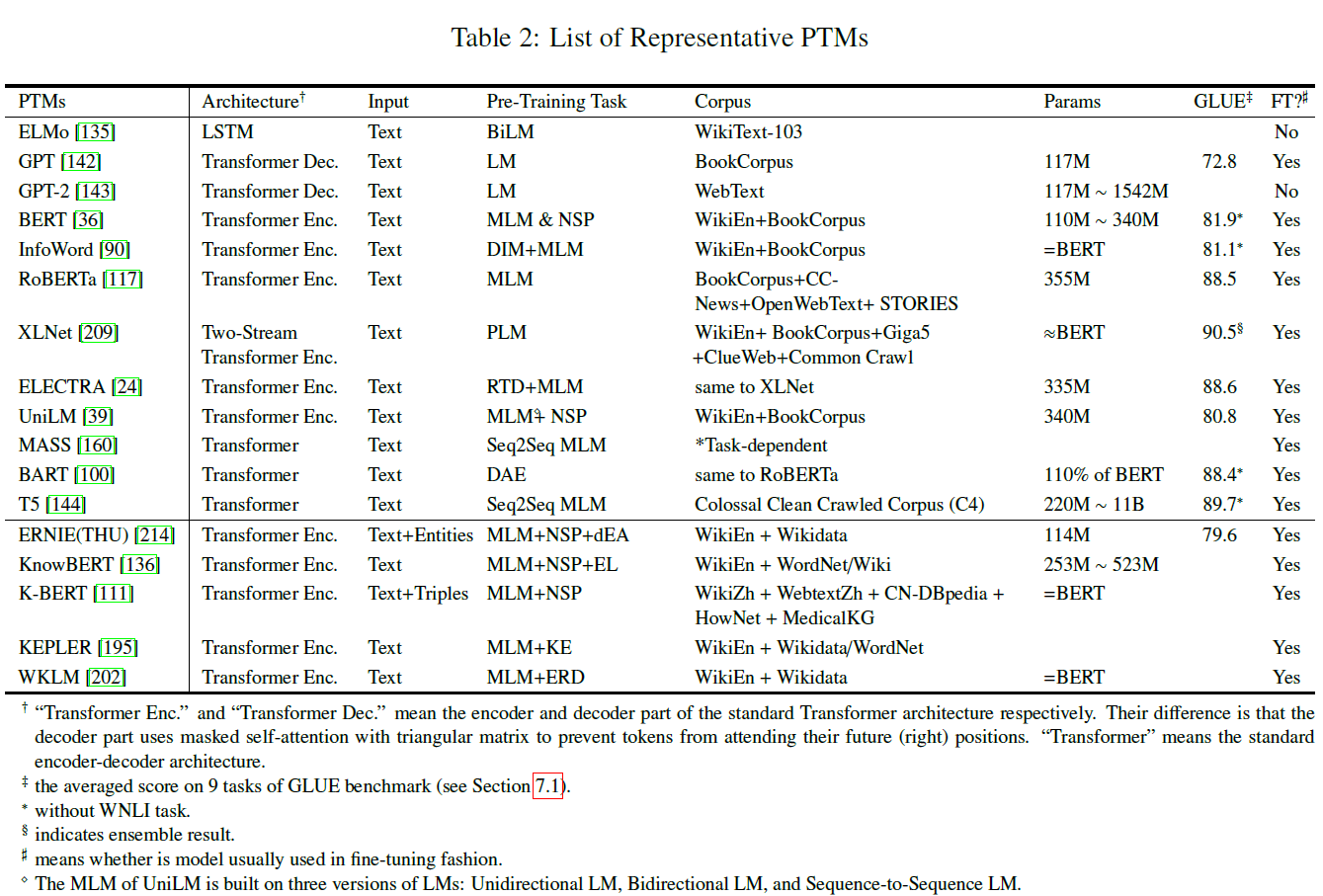
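The following toy sketch makes the distinction concrete. A non-contextual model maps a word to one fixed vector. A contextual model computes each word's vector from the surrounding sentence. The 2-d vectors and the window-averaging "encoder" are hypothetical stand-ins, not a real embedding table and not a real contextual encoder such as an LSTM or Transformer.

```python
from typing import Dict, List

Vector = List[float]

# Hypothetical static embedding table (2-d for readability).
STATIC: Dict[str, Vector] = {
    "the": [0.0, 0.0],
    "river": [1.0, 0.0],
    "bank": [0.5, 0.5],
    "money": [0.0, 1.0],
}

def non_contextual(tokens: List[str]) -> List[Vector]:
    """Each token gets the same vector regardless of its neighbours."""
    return [STATIC[t] for t in tokens]

def contextual(tokens: List[str], window: int = 1) -> List[Vector]:
    """Toy contextual encoder: average the static vectors in a +/-window span,
    so the same word can receive different vectors in different sentences."""
    out = []
    for i in range(len(tokens)):
        span = tokens[max(0, i - window): i + window + 1]
        vecs = [STATIC[t] for t in span]
        out.append([sum(v[d] for v in vecs) / len(vecs) for d in range(2)])
    return out

s1 = ["the", "river", "bank"]
s2 = ["the", "money", "bank"]
print(non_contextual(s1)[2] == non_contextual(s2)[2])  # True: "bank" is identical
print(contextual(s1)[2], contextual(s2)[2])            # [0.75, 0.25] [0.25, 0.75]
```

The word "bank" gets one vector from the static table. Under the toy contextual encoder it gets a different vector in each sentence. That difference is what lets contextual models handle polysemy.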

Architectures

- LSTM

- Transformer Encoder

- Transformer Decoder

- Full Transformer
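One way to see how the three Transformer variants differ is to ask which positions each token may attend to. The sketch below builds 0/1 attention masks and ignores padding. BERT uses the bidirectional encoder pattern and GPT uses the causal decoder pattern. Full encoder-decoder models such as BART and T5 combine both and add cross-attention. The function names are illustrative and do not come from any library.

```python
from typing import List

Mask = List[List[int]]  # mask[i][j] == 1 means position i may attend to position j

def encoder_self_attention_mask(n: int) -> Mask:
    """Transformer encoder: bidirectional, every token sees every token."""
    return [[1] * n for _ in range(n)]

def decoder_self_attention_mask(n: int) -> Mask:
    """Transformer decoder: causal, token i sees only tokens 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def cross_attention_mask(n_target: int, n_source: int) -> Mask:
    """Full Transformer: each decoder position also attends to every encoder position."""
    return [[1] * n_source for _ in range(n_target)]

for row in decoder_self_attention_mask(3):
    print(row)
# [1, 0, 0]
# [1, 1, 0]
# [1, 1, 1]
```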

Pre-Training Task Types

Extensions

- Knowledge-enriched PTMs

    - linguistic

    - semantic

    - commonsense

    - factual

    - domain-specific knowledge

- Multilingual and language-specific PTMs

    - Multilingual PTMs

        - cross-lingual language understanding

        - cross-lingual language generation

    - Language-specific PTMs


- Multi-Modal PTMs

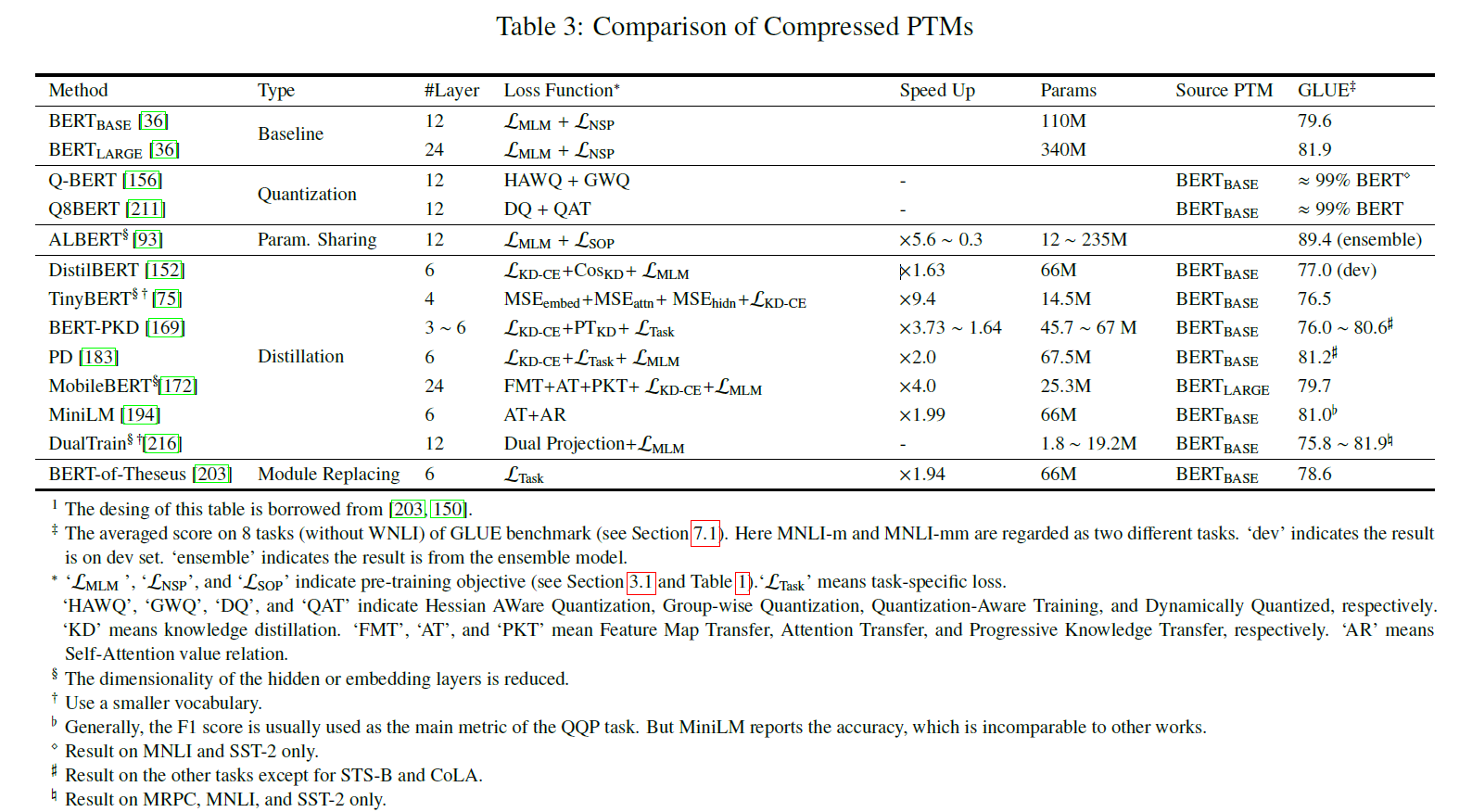

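- Compressed PTMs

    - model pruning: remove less important parameters

    - weight quantization: represent parameters with fewer bits

    - parameter sharing: share parameters among similar units (e.g. layers)

    - knowledge distillation: train a smaller student model to mimic the original (teacher) model

        - distillation from soft target probabilities [DistilBERT]

        - distillation from other knowledge [TinyBERT, MobileBERT, MiniLM]

        - distillation to other structures

    - module replacing: replace modules of the original model with more compact substitutes

    - ...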
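The sketch below applies two of the compression ideas above to a flat list of weights: unstructured magnitude pruning and symmetric 8-bit linear quantization. Both are generic textbook variants chosen for illustration. Real compressed PTMs typically use more elaborate schemes, such as pruning whole attention heads or quantization-aware training, and those are not shown.

```python
from typing import List, Tuple

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Model pruning: zero out the `sparsity` fraction of weights with the smallest |w|."""
    k = int(len(weights) * sparsity)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Weight quantization: symmetric linear mapping of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate reconstruction of the original floats."""
    return [v * scale for v in q]

w = [0.5, -0.02, 1.27, -0.9, 0.01]
print(magnitude_prune(w, 0.4))  # [0.5, 0.0, 1.27, -0.9, 0.0]
q, scale = quantize_int8(w)
print(q)                        # [50, -2, 127, -90, 1]
```

Each weight now fits in one byte plus a single shared float `scale`. The reconstruction error per weight is at most half of `scale`.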
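Distillation from soft target probabilities can be sketched as a loss that pushes the student's temperature-softened output distribution toward the teacher's. This follows the style of Hinton et al.'s knowledge distillation. It is a generic sketch and not DistilBERT's exact training objective. The default temperature is an arbitrary assumption.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def soft_target_loss(student_logits: List[float], teacher_logits: List[float],
                     temperature: float = 2.0) -> float:
    """Cross-entropy of the student's softened distribution against the teacher's,
    scaled by T^2 so gradient magnitudes stay comparable across temperatures."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    ce = -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))
    return ce * temperature ** 2

teacher = [2.0, 1.0, 0.1]
print(soft_target_loss(teacher, teacher) < soft_target_loss([0.1, 1.0, 2.0], teacher))  # True
```

A higher temperature flattens the teacher's distribution. This exposes how the teacher ranks the wrong classes, which is the extra signal the student learns from.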

Pre-training Tasks

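Machine learning can be divided into supervised learning (SL), unsupervised learning (UL), and self-supervised learning (SSL). SSL derives its labels automatically from the input data. This is what makes pre-training on large unlabeled corpora possible.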

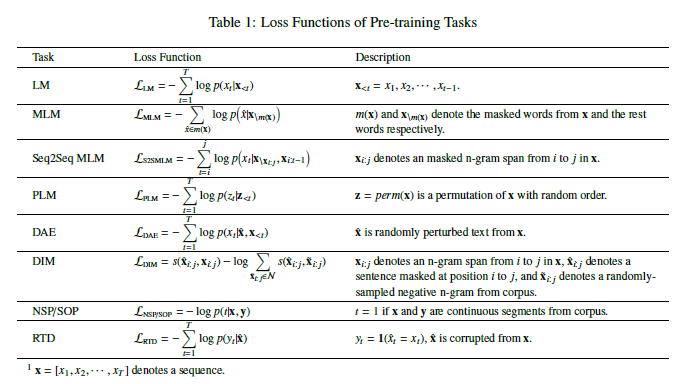

Language Modeling (LM)
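Language modeling factorizes the probability of a sequence with the chain rule:

p(x_1, ..., x_T) = p(x_1) · p(x_2 | x_1) · ... · p(x_T | x_1, ..., x_{T-1})

The model is trained to predict each token from its left context, as in GPT. The sketch below only builds the (context, target) training pairs; the model itself is omitted. The `<s>` start token is an assumption.

```python
from typing import List, Tuple

def lm_training_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Causal LM: for every position t >= 1, predict tokens[t] from tokens[:t]."""
    return [(tokens[:t], tokens[t]) for t in range(1, len(tokens))]

for context, target in lm_training_pairs(["<s>", "the", "cat", "sat"]):
    print(context, "->", target)
# ['<s>'] -> the
# ['<s>', 'the'] -> cat
# ['<s>', 'the', 'cat'] -> sat
```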

Masked Language Modeling (MLM)
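MLM, introduced with BERT, hides some input tokens and trains the model to recover them from both left and right context. The sketch below follows BERT's corruption recipe:

- select about 15% of positions;
- replace 80% of those with [MASK];
- replace 10% with a random token;
- leave the remaining 10% unchanged.

Selecting each position independently with probability `mask_prob` is a simplification of BERT's actual sampling. The toy vocabulary is an assumption.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"

def mlm_corrupt(tokens: List[str], vocab: List[str], mask_prob: float = 0.15,
                rng: Optional[random.Random] = None) -> Tuple[List[str], List[Optional[str]]]:
    """Return (corrupted inputs, labels); labels[i] is None where no prediction is made."""
    rng = rng or random.Random()
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected: no loss here
        labels[i] = tok  # the model must predict the original token
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # random replacement
        # else (10%): keep the original token
    return inputs, labels

tokens = "my dog is hairy".split()
print(mlm_corrupt(tokens, vocab=["apple", "dog", "hairy"], rng=random.Random(0)))
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot assume that only [MASK] positions matter. This reduces the mismatch with fine-tuning, where [MASK] never appears.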

Seq2Seq MLM [used in MASS and T5; benefits Seq2Seq downstream tasks]
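In Seq2Seq MLM, as in MASS, a contiguous span of the input is masked on the encoder side and the decoder generates the masked span. This matches the encoder-decoder setup of Seq2Seq downstream tasks. Here the span position is passed in explicitly for clarity; in practice it is sampled. T5's span-corruption variant, which replaces spans with sentinel tokens, is not shown.

```python
from typing import List, Tuple

MASK = "[MASK]"

def seq2seq_mlm(tokens: List[str], start: int, length: int) -> Tuple[List[str], List[str]]:
    """Mask tokens[start:start+length] in the encoder input; the decoder target is that span."""
    end = start + length
    encoder_input = tokens[:start] + [MASK] * length + tokens[end:]
    decoder_target = tokens[start:end]
    return encoder_input, decoder_target

enc, dec = seq2seq_mlm(["x1", "x2", "x3", "x4", "x5", "x6"], start=2, length=3)
print(enc)  # ['x1', 'x2', '[MASK]', '[MASK]', '[MASK]', 'x6']
print(dec)  # ['x3', 'x4', 'x5']
```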

Enhanced MLM (E-MLM) [RoBERTa <- BERT, UniLM, XLM <- MLM, SpanBERT <- MLM, StructBERT]

Permuted Language Modeling (PLM) XLNet
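PLM (XLNet) keeps the input order but samples a random factorization order. Each token is predicted from the tokens that come before it in that order. Over many sampled orders, every token learns from context on both sides. The sketch below only derives which positions each token may condition on for one order. XLNet's two-stream attention and partial prediction are omitted.

```python
import random
from typing import Dict, List

def sample_order(n: int, rng: random.Random) -> List[int]:
    """Sample a factorization order, i.e. a permutation of positions 0..n-1."""
    order = list(range(n))
    rng.shuffle(order)
    return order

def plm_contexts(order: List[int]) -> Dict[int, List[int]]:
    """Map each position to the positions it may condition on:
    those that appear earlier in the factorization order."""
    return {pos: sorted(order[:k]) for k, pos in enumerate(order)}

print(plm_contexts([2, 0, 3, 1]))
# {2: [], 0: [2], 3: [0, 2], 1: [0, 2, 3]}
```

With the identity order [0, 1, ..., n-1], this reduces to ordinary left-to-right language modeling.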

Denoising Autoencoder (DAE) BART
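A denoising autoencoder corrupts the input and trains the model to reconstruct the original text. BART, for example, combines several noising functions. The sketch below implements three of them: token deletion, text infilling, and sentence permutation. BART samples infilling span lengths from a Poisson distribution (λ = 3); here the span is passed in explicitly so the output is deterministic.

```python
import random
from typing import List

MASK = "<mask>"

def token_deletion(tokens: List[str], p: float, rng: random.Random) -> List[str]:
    """Drop each token with probability p; the model must infer what is missing and where."""
    return [t for t in tokens if rng.random() >= p]

def text_infilling(tokens: List[str], start: int, length: int) -> List[str]:
    """Replace the span tokens[start:start+length] with a single mask token.
    A length of 0 simply inserts a mask."""
    return tokens[:start] + [MASK] + tokens[start + length:]

def sentence_permutation(sentences: List[str], rng: random.Random) -> List[str]:
    """Shuffle the order of the sentences in a document."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled

print(text_infilling(["a", "b", "c", "d", "e"], start=1, length=3))  # ['a', '<mask>', 'e']
```

Because a whole span collapses into one mask token, the model also has to predict how many tokens are missing.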

Contrastive Learning (CTL)
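Contrastive learning trains a scoring function so that a matching pair (x, y+) scores higher than non-matching pairs (x, y-). One common instantiation is the InfoNCE-style loss sketched below. The dot-product score and the toy vectors are assumptions for illustration. Concrete PTMs define their own positives and negatives.

```python
import math
from typing import List

def dot(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))

def info_nce(anchor: List[float], positive: List[float],
             negatives: List[List[float]], temperature: float = 1.0) -> float:
    """Negative log of the softmax probability assigned to the positive
    among {positive} plus the negatives."""
    scores = [dot(anchor, positive)] + [dot(anchor, n) for n in negatives]
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # log-sum-exp trick for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scaled))
    return log_sum_exp - scaled[0]

anchor = [1.0, 0.0]
good = info_nce(anchor, [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
bad = info_nce(anchor, [-1.0, 0.0], [[0.0, 1.0], [1.0, 0.0]])
print(good < bad)  # True
```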

Deep InfoMax (DIM)

Next Sentence Prediction (NSP)

Sentence Order Prediction (SOP) ALBERT, StructBERT, BERTje
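NSP and SOP differ mainly in how negatives are built:

- In BERT's NSP, half of the pairs use the actual next sentence and half use a sentence from another document. Topic cues alone can therefore solve many cases.
- ALBERT's SOP keeps two consecutive segments and creates negatives by swapping their order. This forces the model to learn discourse coherence.

The sketch follows this description. The "InOrder"/"Swapped" label strings and the list-of-sentences data structures are illustrative assumptions.

```python
import random
from typing import List, Tuple

def nsp_example(doc: List[str], i: int, other_docs_sentences: List[str],
                rng: random.Random) -> Tuple[str, str, str]:
    """Next Sentence Prediction: B is the true next sentence or one from another document."""
    a = doc[i]
    if rng.random() < 0.5:
        return a, doc[i + 1], "IsNext"
    return a, rng.choice(other_docs_sentences), "NotNext"

def sop_example(doc: List[str], i: int, rng: random.Random) -> Tuple[str, str, str]:
    """Sentence Order Prediction: two consecutive sentences, possibly swapped."""
    a, b = doc[i], doc[i + 1]
    if rng.random() < 0.5:
        return a, b, "InOrder"
    return b, a, "Swapped"

doc = ["He went to the store.", "He bought milk.", "Then he went home."]
other = ["Penguins cannot fly."]
rng = random.Random(0)
print(nsp_example(doc, 0, other, rng))
print(sop_example(doc, 0, rng))
```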

Others

- incorporate factual knowledge

- improve cross-lingual tasks

- multi-modal applications

- ...

Adapting PTMs to Downstream Tasks

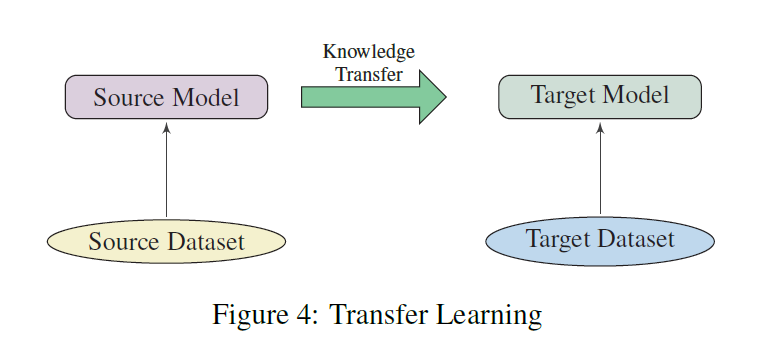

Once a model has been pre-trained, an important question remains: how to apply the PTM to downstream tasks. This is a transfer learning problem.

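- How to transfer?

    - Choose an appropriate pre-training task, model architecture, and corpus.

    - Choose appropriate layers (lower layers tend to capture basic information such as syntax; higher layers benefit semantic tasks).

    - Decide whether to fine-tune (to tune or not to tune):

        - feature extraction (the pre-trained parameters are frozen)

        - fine-tuning (the pre-trained parameters are unfrozen and fine-tuned)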

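- Fine-tuning strategies

    - Two-stage fine-tuning

        - first fine-tune on an intermediate task or corpus

        - then fine-tune on the target task

    - Multi-task fine-tuning

    - Fine-tuning with extra adaptation modules

        - the original parameters stay frozen; additional tunable modules are introduced

    - Others

        - self-ensemble and self-distillation

        - gradual unfreezing and sequential unfreezing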

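Feature extraction, fine-tuning, and schedules such as gradual unfreezing (popularized by ULMFiT) differ in which pre-trained layers receive gradient updates at each point in training. This minimal sketch makes three assumptions:

- a stack of `num_layers` layers indexed from the bottom (0) to the top;
- a task-specific head that is always trained and therefore not listed;
- illustrative strategy names.

```python
from typing import Set

def trainable_layers(num_layers: int, strategy: str, epoch: int = 0) -> Set[int]:
    """Indices of pre-trained layers (0 = bottom) that are updated in a given epoch."""
    if strategy == "feature_extraction":
        return set()  # all pre-trained parameters frozen; only the task head learns
    if strategy == "fine_tuning":
        return set(range(num_layers))  # everything unfrozen from the start
    if strategy == "gradual_unfreezing":
        k = min(num_layers, epoch + 1)  # one more layer per epoch, top layer first
        return set(range(num_layers - k, num_layers))
    raise ValueError(f"unknown strategy: {strategy}")

for epoch in range(3):
    print(epoch, sorted(trainable_layers(4, "gradual_unfreezing", epoch)))
# 0 [3]
# 1 [2, 3]
# 2 [1, 2, 3]
```

Unfreezing from the top down follows the observation that higher layers are more task-specific. This lowers the risk of destroying the general features in the lower layers early in training.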
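Fine-tuning with extra adaptation modules can be sketched with a bottleneck adapter in the spirit of Houlsby et al. (2019). The adapter consists of a small down-projection, a nonlinearity, an up-projection, and a residual connection, and it is inserted after a frozen pre-trained layer. Only `W_down` and `W_up` would be trained. The ReLU nonlinearity and the plain-list matrices are assumptions for illustration.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]

def adapter(h: Vector, W_down: Matrix, W_up: Matrix) -> Vector:
    """h + W_up · relu(W_down · h); W_down is (bottleneck x d), W_up is (d x bottleneck)."""
    z = relu(matvec(W_down, h))               # project d -> bottleneck
    delta = matvec(W_up, z)                   # project bottleneck -> d
    return [a + b for a, b in zip(h, delta)]  # residual connection

h = [1.0, -2.0, 3.0]
W_down = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # d = 3 -> bottleneck = 2
W_up_zero = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
print(adapter(h, W_down, W_up_zero))  # [1.0, -2.0, 3.0]  (identity at zero init)
```

The residual path passes `h` through unchanged. An adapter whose up-projection is initialized near zero therefore starts out close to the identity, so inserting it does not disturb the pre-trained model at the start of training.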

Applications of PTMs

- General evaluation benchmarks (GLUE, SuperGLUE)

- Question Answering

- Sentiment Analysis

- Named Entity Recognition

- Machine Translation

- Summarization

- Adversarial Attacks and Defenses

Future Directions

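- Upper Bound of PTMs

    - Current PTMs have not yet reached their upper bound. Pushing it further calls for deeper architectures, larger corpora, more challenging pre-training tasks, and more efficient loss functions. A more practical approach at present is to design efficient model architectures, self-supervised pre-training tasks, optimizers, and training techniques on top of existing hardware and software, as ELECTRA does.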

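- Architecture of PTMs

    - The Transformer has proven to be an effective architecture for pre-training. However, the cost of its self-attention grows quadratically with sequence length, so more efficient designs are needed, such as Transformer-XL, which handles longer contexts. Designing architectures by hand is difficult, so neural architecture search (NAS) may be a promising approach.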

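- Task-oriented Pre-training and Model Compression

    - Different downstream tasks require different abilities from PTMs. PTMs and downstream tasks usually differ in two respects: model architecture and data distribution.

    - Larger PTMs generally perform better on downstream tasks, but they are too large to deploy on low-capacity or low-latency devices. Task-specific knowledge therefore needs to be extracted from them.

    - Model distillation can be used to build a smaller network from the original PTM.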

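- Knowledge Transfer Beyond Fine-tuning

    - Introduce tunable task-specific modules so that the same PTM can be reused across tasks.

    - Extract knowledge from PTMs more flexibly, e.g. via feature extraction, knowledge distillation, or data augmentation.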

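- Interpretability and Reliability of PTMs

    - Interpretability: deep models are poorly interpretable, and most current work explains them through attention.

    - Reliability: because deep models are not interpretable, their behavior may diverge from human cognition and lead to counter-intuitive predictions. Studying this through adversarial attacks and defenses is a very promising direction.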
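ELECTRA, mentioned above as an example of a more efficient pre-training approach, uses replaced token detection. A small generator fills in masked positions, and the main model (the discriminator) classifies every token as original or replaced. The loss therefore covers all positions rather than only the roughly 15% that are masked. The sketch below shows only how the discriminator's labels are derived. The generator is replaced by a hypothetical fixed substitution map.

```python
from typing import Dict, List

def corrupt(tokens: List[str], positions: List[int], generator: Dict[str, str]) -> List[str]:
    """Replace the tokens at `positions` with the generator's sample.
    `generator` is a hypothetical stand-in: a fixed lookup instead of a small MLM."""
    out = list(tokens)
    for i in positions:
        out[i] = generator.get(tokens[i], tokens[i])
    return out

def rtd_labels(original: List[str], corrupted: List[str]) -> List[int]:
    """1 = replaced, 0 = original. A generator sample that happens to equal the
    original token is labeled 'original', as in ELECTRA."""
    return [int(o != c) for o, c in zip(original, corrupted)]

original = ["the", "chef", "cooked", "the", "meal"]
fake_generator = {"the": "the", "cooked": "ate"}  # hypothetical samples
corrupted = corrupt(original, [0, 2], fake_generator)
print(corrupted)                        # ['the', 'chef', 'ate', 'the', 'meal']
print(rtd_labels(original, corrupted))  # [0, 0, 1, 0, 0]
```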